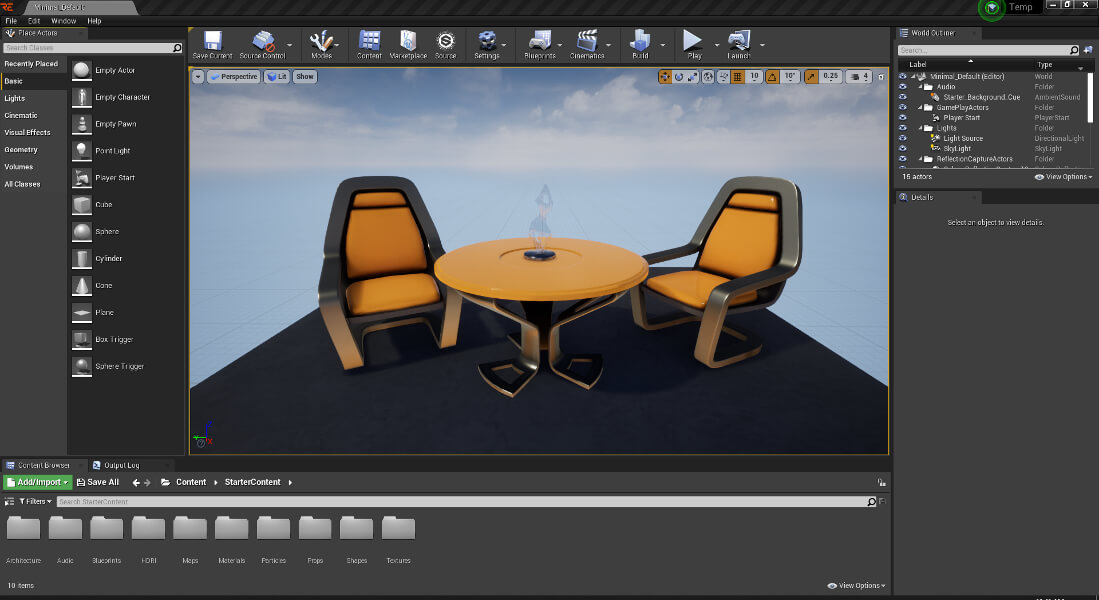

In many film and television productions, traditional set construction is no longer used, or only to a very limited extent. Virtual reality (VR) makes this possible: instead of building elaborate sets, productions can adjust the shape, colour and size of objects with just a few mouse clicks.

VR therefore offers a wide range of advantages:

- Multiple use of studios for different programmes

- Reorganisation of the set without interrupting transmission

- No limits to creativity

- Cost and space benefits

- and much more.

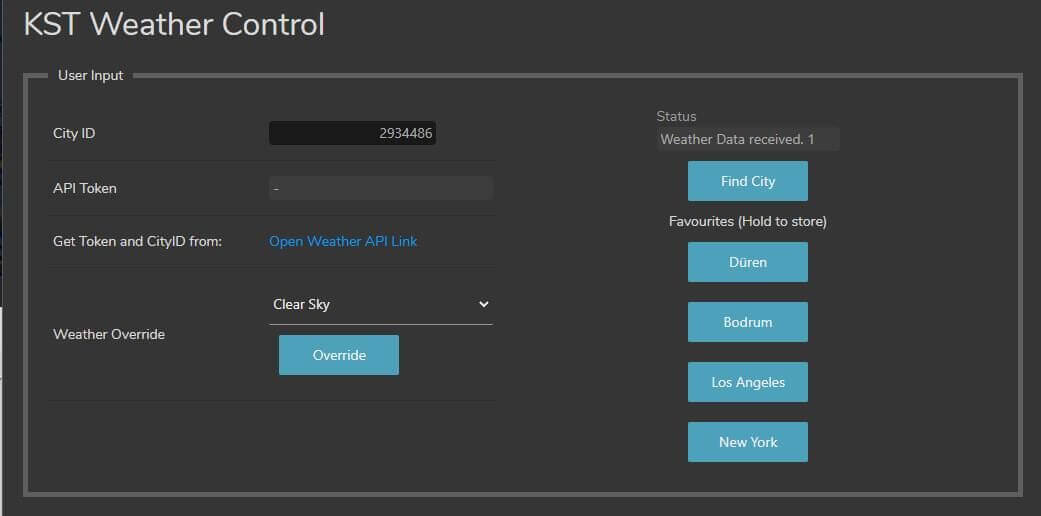

For a VR environment to be used in broadcast, including live operation, many technical components have to work together. Some of them are particularly crucial, such as camera tracking, at least if the camera is to be moved. Without camera tracking, the virtual scene cannot be rendered from the real camera's current perspective, and the resulting image would be unusable.

The term tracking refers to a data stream that contains the camera's position and rotation in space as well as the state of the lens, such as zoom and focus. With this data, the rendering machine can keep the perspective of the virtual camera synchronised with that of the real camera at all times. Precise tracking is therefore essential for the illusion of a virtual studio.
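As a rough illustration of what one sample of such a data stream might carry, here is a minimal Python sketch. The field names, the units and the `TrackingSample` type are assumptions made for this example and do not correspond to any particular tracking protocol. The helper derives the horizontal field of view that a virtual camera would need from the reported focal length, assuming an ideal lens without distortion.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrackingSample:
    """One sample of a camera tracking stream (hypothetical layout)."""
    timestamp_s: float        # capture time in seconds
    x_m: float                # camera position in metres
    y_m: float
    z_m: float
    pan_deg: float            # camera rotation in degrees
    tilt_deg: float
    roll_deg: float
    focal_length_mm: float    # lens state: zoom, expressed as focal length
    focus_distance_m: float   # lens state: focus distance


def horizontal_fov_deg(sample: TrackingSample, sensor_width_mm: float) -> float:
    """Horizontal field of view of an ideal, distortion-free lens."""
    if sample.focal_length_mm <= 0 or sensor_width_mm <= 0:
        raise ValueError("focal length and sensor width must be positive")
    half_angle = math.atan(sensor_width_mm / (2.0 * sample.focal_length_mm))
    return math.degrees(2.0 * half_angle)
```

A render engine would apply the position and rotation to its virtual camera and set the field of view from the lens data on every frame. Real systems usually also transmit lens distortion parameters, which this sketch leaves out.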

There are two basic approaches to determining the camera position in the room:

- Robotic or internal tracking

- External tracking

Robotic / internal tracking

If robotic camera systems are used for production, no additional external tracking solution is required, because the system's internal tracking already captures the spatial data. With robotic or internal tracking, the position is determined precisely from the encoders of the mechanical system.
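How an encoder reading turns into a tracking value can be sketched in a few lines of Python. The function name, the counts-per-revolution parameter and the gear ratio are assumptions for illustration; actual robotic heads expose their encoder data in vendor-specific ways.

```python
def encoder_to_degrees(counts: int, counts_per_rev: int, gear_ratio: float = 1.0) -> float:
    """Convert raw encoder counts into the angle of a mechanical axis in degrees.

    gear_ratio is the number of encoder revolutions per axis revolution
    (hypothetical parameter; 1.0 means the encoder sits directly on the axis).
    """
    if counts_per_rev <= 0 or gear_ratio <= 0:
        raise ValueError("counts_per_rev and gear_ratio must be positive")
    return 360.0 * counts / (counts_per_rev * gear_ratio)
```

Because the angle follows directly from the counted position, no image analysis is involved, which is where the robustness of this method comes from.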

A major advantage of this method is its high precision and insensitivity to external influences. Because the encoders read the physical position directly, rather than having to “see” it and calculate it through optical analysis as external systems do, the coordinates show no, or at least considerably less, jitter (wobble). The tracking system also adds no delay of its own. Depending on the robotics control software, the robotics can even be controlled so precisely that the tracking data is pre-calculated and sent before the actual movement, which can almost completely eliminate the production delay caused by the graphics rendering time. This is particularly useful when working with LED walls in a virtual production.
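The idea of sending tracking data ahead of the actual movement can be sketched as follows. This is a simplified Python illustration under stated assumptions: the planned move is given as pan keyframes, the motion between keyframes is linear, and the render delay is a known constant. The names `planned_pan` and `tracking_to_send` are hypothetical and not taken from any robotics control software.

```python
from bisect import bisect_right

Keyframe = tuple[float, float]  # (time in seconds, pan angle in degrees)


def planned_pan(keyframes: list[Keyframe], t: float) -> float:
    """Linearly interpolate the planned pan angle at time t, clamped at both ends."""
    times = [k[0] for k in keyframes]
    if t <= times[0]:
        return keyframes[0][1]
    if t >= times[-1]:
        return keyframes[-1][1]
    i = bisect_right(times, t)  # index of the first keyframe later than t
    (t0, a0), (t1, a1) = keyframes[i - 1], keyframes[i]
    return a0 + (a1 - a0) * (t - t0) / (t1 - t0)


def tracking_to_send(keyframes: list[Keyframe], now_s: float, render_delay_s: float) -> float:
    """Return the pan angle the head will have once the current frame has been rendered."""
    return planned_pan(keyframes, now_s + render_delay_s)
```

Since the control software knows the planned trajectory, it can report where the camera will be after the render delay rather than where it is now, so the finished graphics line up with the real image.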

One disadvantage of internal tracking is that it is limited to the robotic or mechanical system itself and, unlike most external methods, does not allow completely free movement in space. A robot or PTZ camera can record and thus track its own movements, but if the system is moved from outside, for example by hand, the mechanical encoders do not register this and the tracking is not updated. With robotic tracking, every movement of the system must take place along axes that are fitted with encoders. If the robot is also to travel through the room, it must therefore move on a rail equipped with encoders or something similar.

External tracking

External tracking is therefore intended for hand-held cameras or pedestals that can move freely in space. It can be carried out optically or via sensors, for example using infrared.

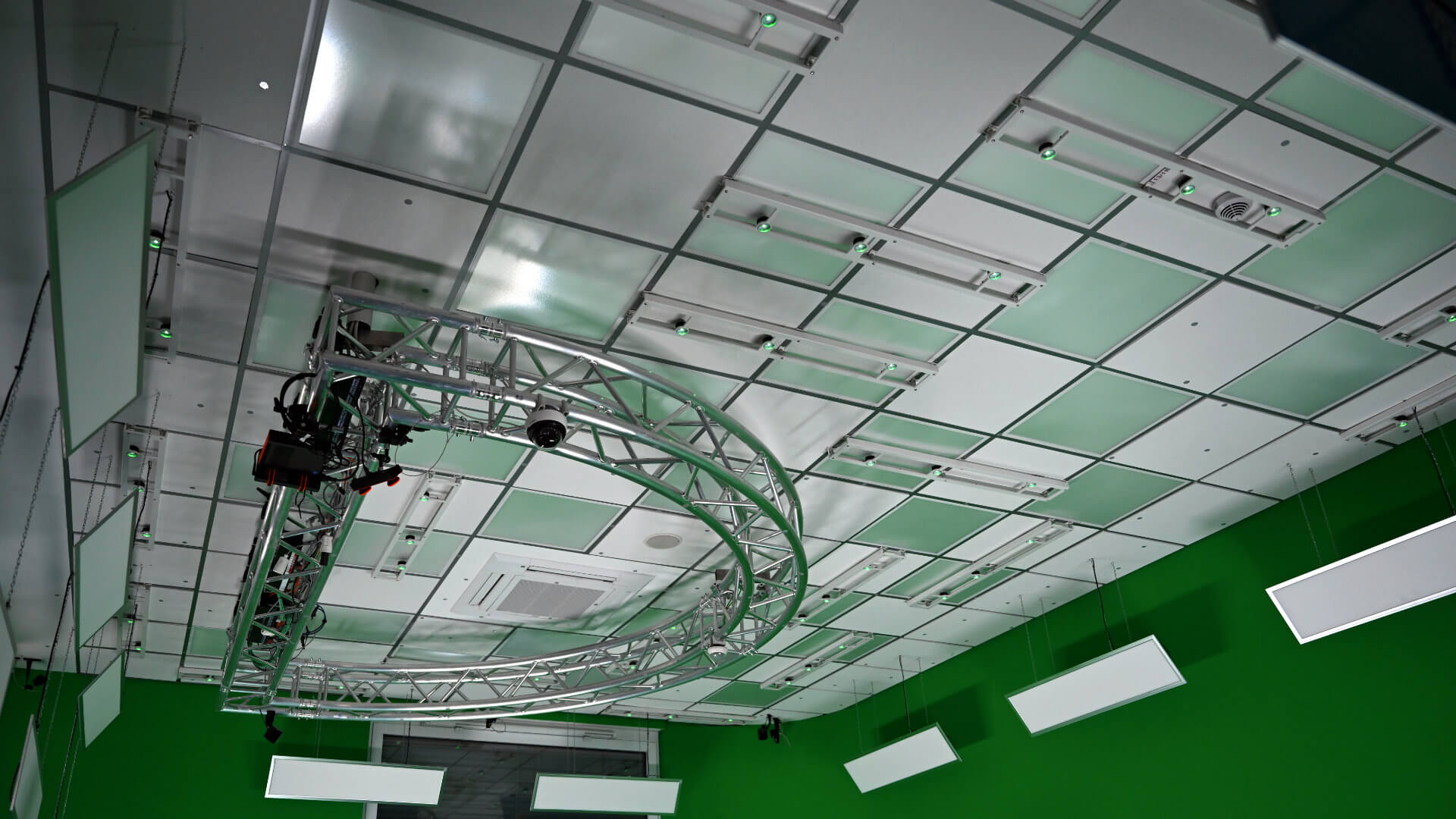

A common optical tracking system is Antilatency in combination with the Eztrack HUB from Miraxyz. This widely used solution works with position LEDs mounted on the ceiling in a unique pattern. A sensor mounted on the camera recognises this pattern and reads it out; the result is converted into coordinates in three-dimensional space and transmitted to the rendering machines. In this system, the “active” component is the LEDs on the studio ceiling.

Another option is the Vive system known from VR headsets, in which base stations are installed in opposite corners of the room and locate a tracker in the room via infrared. The tracker itself is a geometrically complex body fitted with infrared sensors. The “active” emitting components here are the base stations: they sweep the room with IR beams, and the tracker is located from the moments at which these sweeps hit its sensors. As with Antilatency, the tracking technology, which originally comes from the consumer sector, is made broadcast-capable by the Eztrack HUB.

In addition to the comparatively inexpensive options presented so far, there are also higher-quality and more expensive methods, such as Mo-Sys Startracker or Optitrack.

With Mo-Sys, reflector points are attached to the ceiling in a random pattern, and an upward-facing IR camera with an IR spotlight is mounted on the studio camera. In contrast to Antilatency, the active component is therefore on the camera.

With Optitrack, IR cameras are placed in the corners of the room, and IR LEDs are mounted in a specific arrangement on the camera to be tracked. The IR cameras see these LEDs and use them to localise the camera.

In essence, almost all external tracking systems for real-time use are based on some form of infrared light and differ only in price, placement of the active component, precision and range. All of these systems can struggle with coverage and, because they rely on infrared, with direct daylight.
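One simple way to compare the precision of such systems is to leave the camera completely still and measure how much the reported coordinates wobble. The following Python sketch computes two common figures for a single axis. The function name and the choice of metrics are assumptions for illustration, not a standardised test procedure.

```python
import statistics


def jitter_mm(positions_m: list[float]) -> tuple[float, float]:
    """Jitter of one position axis, recorded while the camera is static.

    Returns (standard deviation, peak-to-peak range), both in millimetres.
    """
    if len(positions_m) < 2:
        raise ValueError("need at least two samples to measure jitter")
    std_mm = statistics.pstdev(positions_m) * 1000.0
    peak_to_peak_mm = (max(positions_m) - min(positions_m)) * 1000.0
    return std_mm, peak_to_peak_mm
```

Running the same measurement at several points in the studio, including near the edge of the tracked area, also gives a first impression of coverage.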

For the sake of completeness, talent tracking should also be mentioned. Talent tracking follows the presenter and their movements. This allows them to move naturally in the virtual world, to interact with virtual objects, or to be followed automatically by cameras. Similar or even the same tracking systems can be used as for camera tracking, but there are also real-time-capable alternatives such as Zero Density’s Traxis Talent, which works without a tracker by using optical image analysis.

In conclusion, all tracking systems have their advantages and disadvantages, so there is no “ultimate” system that stands out. Instead, the conditions of the production environment are decisive when choosing a suitable system.

Do you have any questions or would you like to see the various tracking systems live? Then give us a call on +49 2421 55 890 and visit our Innovation Centre.

Your contact

Felix Moschkau

CTO

felix.moschkau@kst-moschkau.eu